Fairness

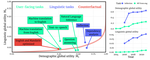

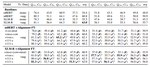

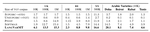

Advances in natural language processing (NLP) technology now make it possible to perform many tasks through natural language or over natural language data – automatic systems can answer questions, perform web search, or command our computers to perform specific tasks. However, “language” is not monolithic; people vary in the language they speak, the dialect they use, the relative ease with which they produce language, or the words they choose with which to express themselves. In benchmarking of NLP systems however, this linguistic variety is generally unattested. Most commonly tasks are formulated using canonical American English, designed with little regard for whether systems will work on language of any other variety. In this work we ask a simple question: can we measure the extent to which the diversity of language that we use affects the quality of results that we can expect from language technology systems? This will allow for the development and deployment of fair accuracy measures for a variety of tasks regarding language technology, encouraging advances in the state of the art in these technologies to focus on all, not just a select few. Funded by Amazon and the NSF through the NSF-FAI program.

Antonios Anastasopoulos

Assistant Professor

I work on multilingual models, machine translation, speech recognition, and NLP for under-served languages.

Related

- Global Voices, Local Biases: Socio-Cultural Prejudices across Languages

- Are Large Language Models Geospatially Knowledgeable?

- Geographic and Geopolitical Biases of Language Models

- Phylogeny-Inspired Adaptation of Multilingual Models to New Languages

- The GMU System Submission for the SUMEval 2022 Shared Task